I plan to have some updates around March. Since summer I have been in a period of extreme optimization and rearchitecting, aiming for an impressively small EXE size, low memory use, low CPU use, and efficient compute-shader-based GPU rasterization, with an initial focus on 2D-only rendering of generic geospatial data. I did the "flashy" part first (the demo 3D engine) to prove ability, get excitement going (including my own), and set a goal for reimplementation in the performance-optimized final product. Basically, September 2023 to June 2024 was visual work (groundwork, to be reused later), and since then it has been mostly technical/non-visual work. Then in the past 2 months I have created (and am finishing) an optimized D3D12 renderer: basically a month of work just to make my current simple progress on 2D multi-map tiled raster data display future-ready, and without any forward progress.

I will be switching to content and actual rendering engine components soon, once I have perfected the basis of a modern, optimized graphics application to the furthest extreme reasonably possible. I am getting close, but now that I finally have a modern professional-grade Direct3D 12 renderer (written in pure Pascal/Win32 API calls and not using external D3D12 engine libraries), there are some further refactorings I can do to take advantage of that and to enable future techniques that will offer even more power savings and optimum usage of hardware.

The boring work is the most exciting to me, because everything is so efficient and stable that it will be very impressive once all the content is slowly built back in using this ultra-optimized modern GPU rendering engine.

I am designing the whole product to use GPU compute shaders to rasterize multiple datasets in single draw calls. This will allow for all sorts of optimizations (like not having to render background pixels if opaque radar data is on top) as well as custom blend modes, which enable very rich graphics. A lot of the improved graphical appearance will come from heavy use of real-time convolutions (distance fields, blur, glow, shadow, etc.). I have also been performance-optimizing a general method for these, though so far I have not implemented it beyond a basic UI effect.
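To illustrate the "skip the background under opaque radar" idea, here is a minimal CPU-side sketch in Python. It is not the WSV3 shader code. It assumes each dataset can be sampled per pixel as a straight-alpha RGBA value and that layers are visited top-most first with the standard "over" blend; the names `composite_pixel`, `radar`, and `background` are hypothetical. In a compute shader, the body of `composite_pixel` would roughly correspond to the work of one thread for one pixel.

```python
def composite_pixel(layers, x, y, opaque_threshold=0.999):
    """Blend layers front to back at pixel (x, y).

    layers: callables ordered top-most first; each returns (r, g, b, a)
            with straight (non-premultiplied) alpha in [0, 1].
    Returns the premultiplied result and how many layers were sampled.
    """
    r = g = b = a = 0.0
    sampled = 0
    for sample in layers:
        sr, sg, sb, sa = sample(x, y)
        sampled += 1
        # Only the fraction not already covered by layers above contributes.
        weight = (1.0 - a) * sa
        r += weight * sr
        g += weight * sg
        b += weight * sb
        a += weight
        # Early out: once the pixel is opaque, lower layers are invisible,
        # so they are never sampled at all.
        if a >= opaque_threshold:
            break
    return (r, g, b, a), sampled


# Hypothetical layers: opaque red "radar" only at x == 0, blue map everywhere.
def radar(x, y):
    return (1.0, 0.0, 0.0, 1.0) if x == 0 else (0.0, 0.0, 0.0, 0.0)


def background(x, y):
    return (0.0, 0.0, 1.0, 1.0)


covered = composite_pixel([radar, background], 0, 0)
uncovered = composite_pixel([radar, background], 1, 0)
print(covered)    # radar only, background never sampled
print(uncovered)  # radar is transparent here, so the background is sampled
```

Where the radar layer is opaque the loop stops after one sample, and that saved work is the point of doing all datasets in one pass. A custom blend mode would replace the `weight` arithmetic with a different per-layer combine function.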

The next-gen WSV3 will use deferred rendering extensively. This should decrease GPU use during looping and enable even richer graphical effects at real-time framerates. I also haven't started on another significant and necessary innovation that I know I can do a great job with: a GPU-based timeseries raster data memory compression algorithm that can also be related to the transmission format.

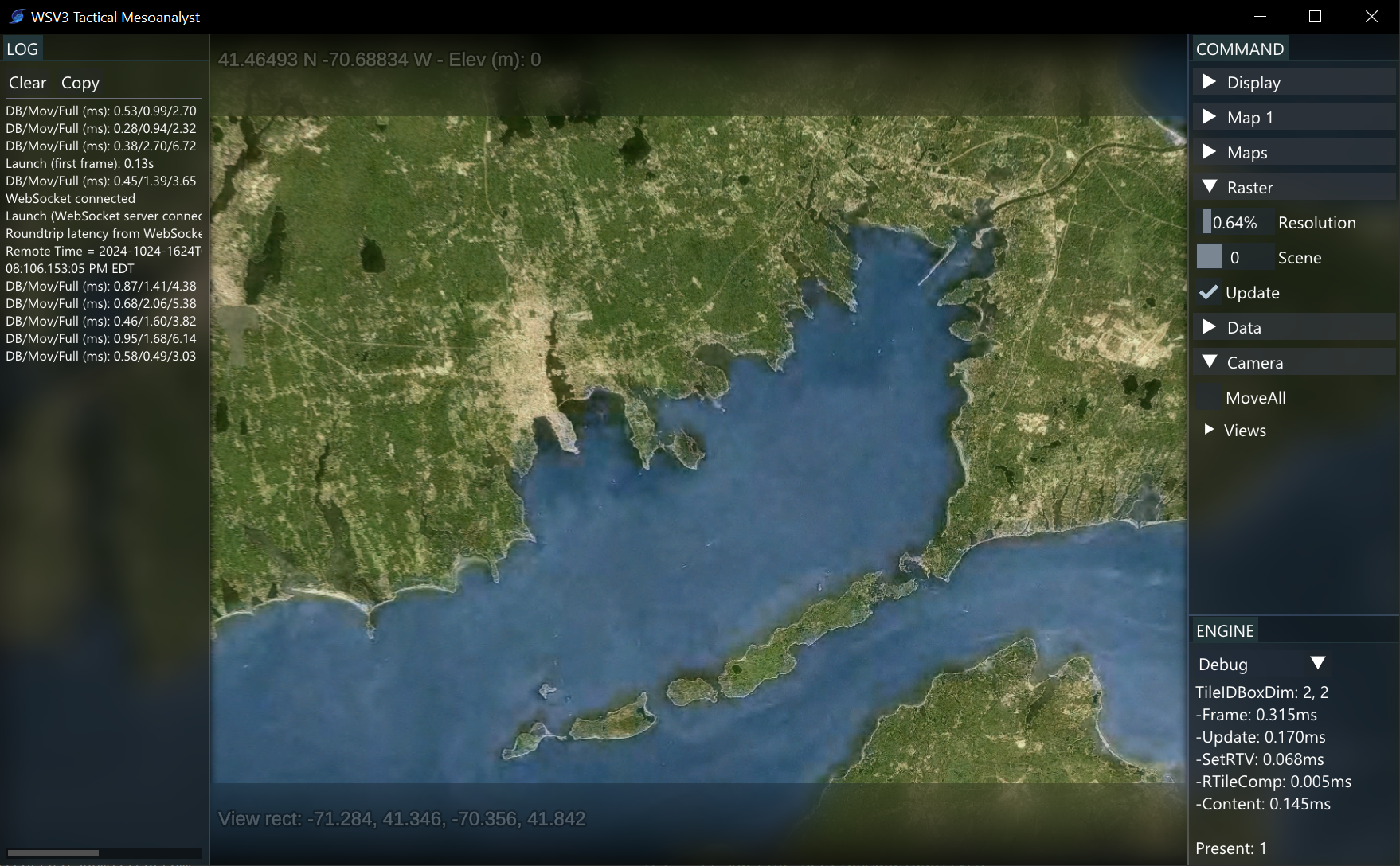

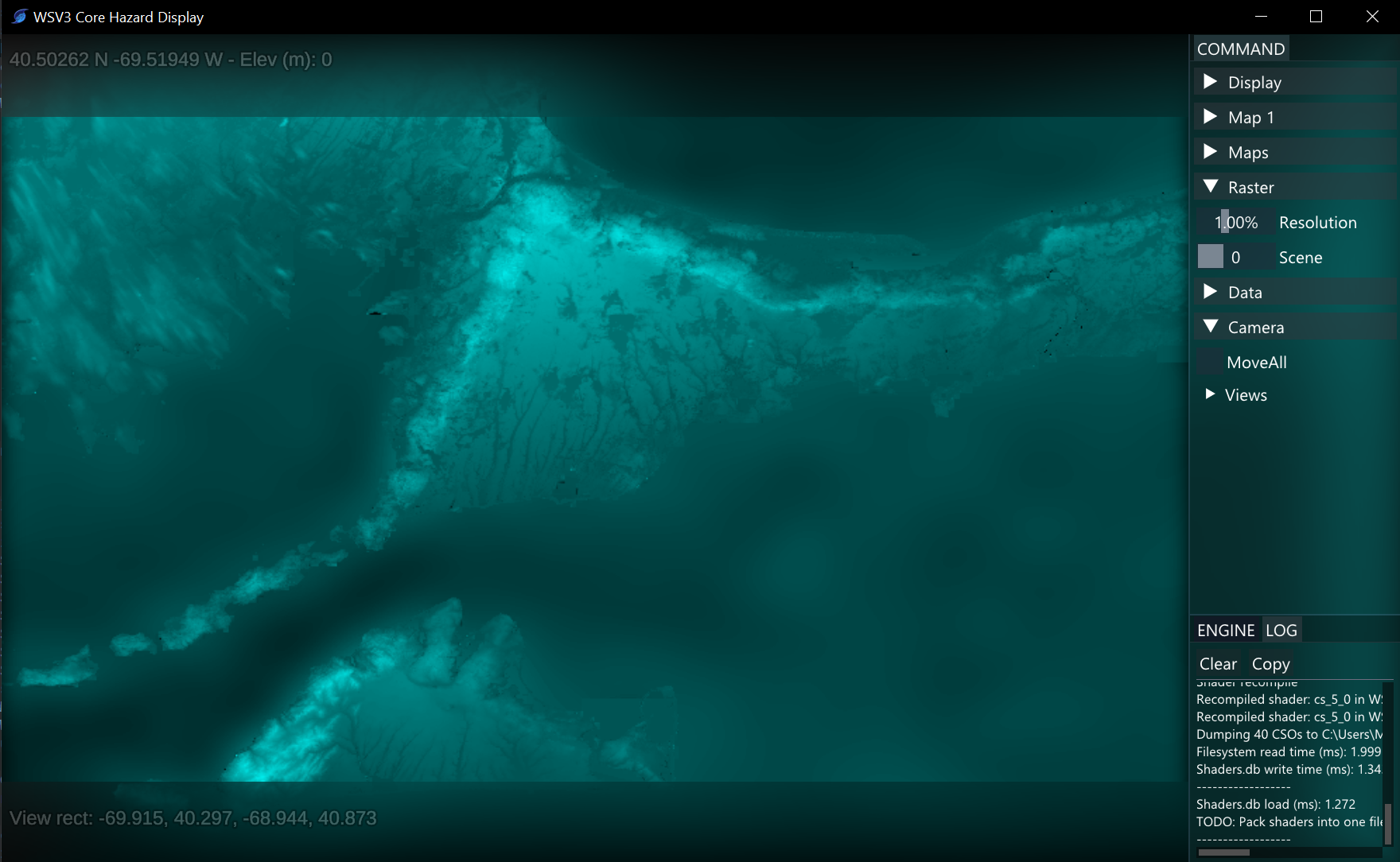

Lots more "invisible" work remains, but we do hope to soft-release an extremely simple, no-customization, 2D-only "WSV3 Core Hazard Display" (or the like) cut-down, lower-price version in the summer. This will help test the new seamless VM-supporting floating licensing system and the new performant real-time server data ingest/streaming infrastructure before the major release, a year from now, of WSV3 Tactical Mesoanalyst, with 3D terrain, 8x multi-maps, and everything else.

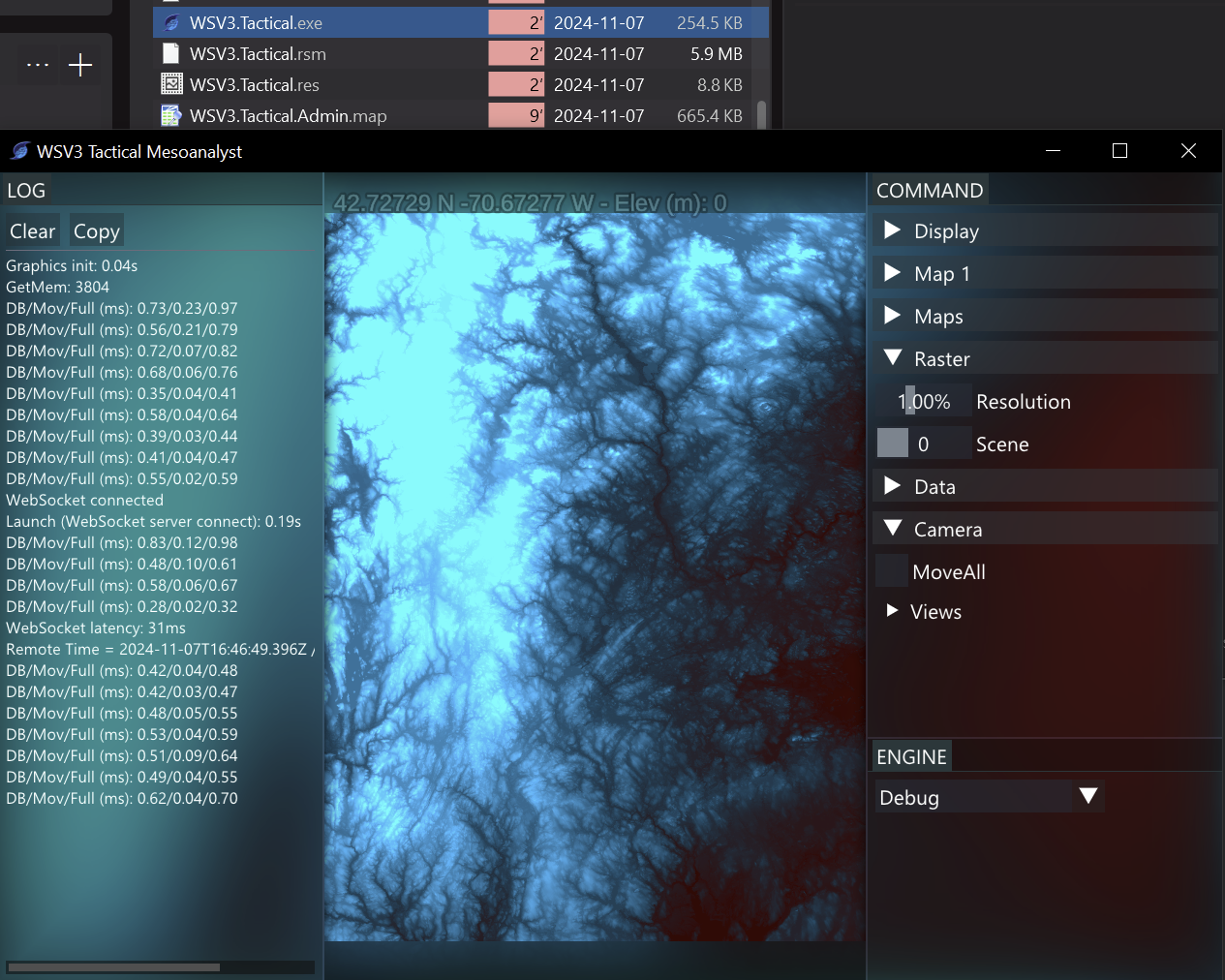

Here are some recent diagnostic screenshots showing work with basic elevation/imagery data while developing the less graphically impressive, under-the-hood optimization aspects. Check out the EXE file size 🙂